DIFAI: Diverse Facial Inpainting using StyleGAN Inversion

International Conference on Image Processing (ICIP), 2022

Dongsik Yoon¹, Jeong-gi Kwak¹, Yuanming Li¹, David K Han², Hanseok Ko¹

¹Korea University, ²Drexel University

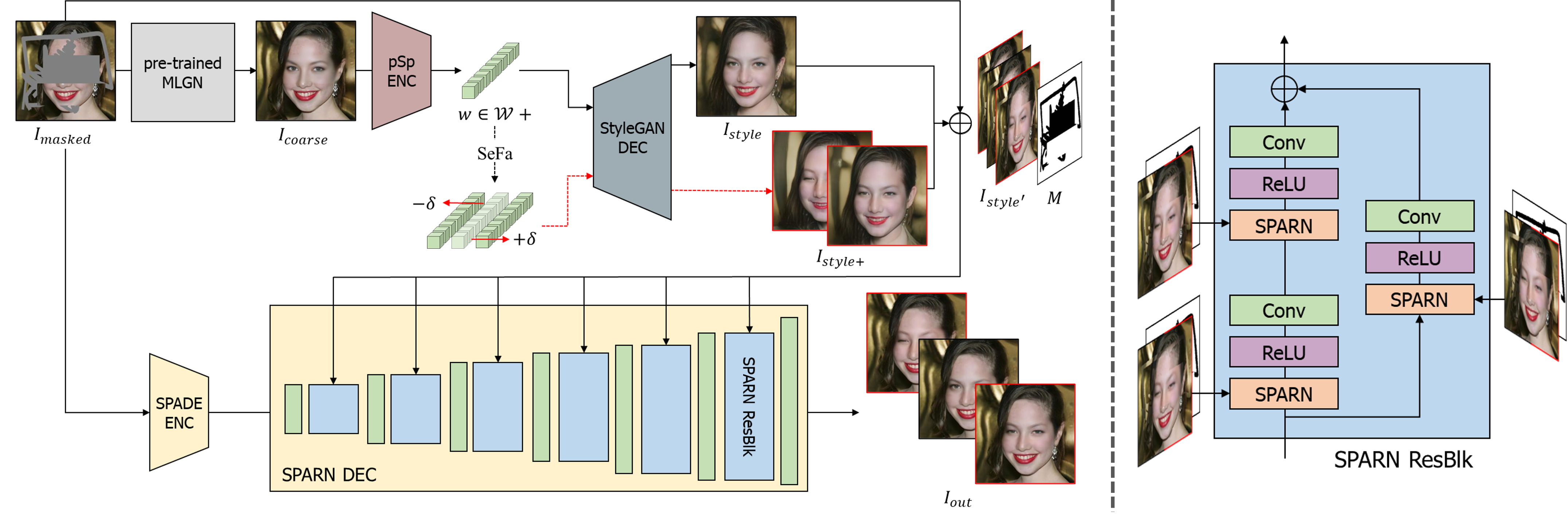

Overview of the proposed diverse facial inpainting framework using StyleGAN inversion

Abstract

Image inpainting, which restores occluded regions and completes damaged images, is a long-standing problem in computer vision. In facial image inpainting, most existing methods generate only one result for each masked image, even though other plausible completions exist.

To avoid the potential biases and unnatural constraints that stem from generating a single image, we propose a novel framework for diverse facial inpainting that exploits the embedding space of StyleGAN. Our framework employs the pSp encoder and the SeFa algorithm to identify semantic components of the StyleGAN embeddings and feeds them into our proposed SPARN decoder, which adopts region normalization for plausible inpainting. We demonstrate that our proposed method outperforms several state-of-the-art methods.
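For readers unfamiliar with SeFa, the NumPy sketch below illustrates the closed-form factorization it is built on: the semantic directions are the eigenvectors of AᵀA with the largest eigenvalues, where A is the weight of an affine layer that maps a latent code to style parameters. This is a generic illustration, not the DIFAI code; the function name `sefa_directions`, the assumed `(out_dim, latent_dim)` weight layout, and the random stand-in weight in the usage line are all hypothetical.

```python
import numpy as np


def sefa_directions(weight: np.ndarray, k: int = 5) -> tuple[np.ndarray, np.ndarray]:
    """Closed-form factorization (SeFa) of an affine layer's weight.

    weight: matrix A of shape (out_dim, latent_dim) mapping a latent code w to
            style parameters y = A @ w + b (assumed layout; real checkpoints
            may store the transpose).
    Returns the top-k unit-norm directions, shape (k, latent_dim), and their
    eigenvalues in descending order.
    """
    # A^T A is symmetric positive semi-definite, so eigh is the right solver.
    gram = weight.T @ weight
    eigvals, eigvecs = np.linalg.eigh(gram)   # eigenvalues in ascending order
    order = np.argsort(eigvals)[::-1][:k]     # indices of the k largest
    directions = eigvecs[:, order].T          # each row is one direction
    return directions, eigvals[order]


# Usage with a small random stand-in weight (a real StyleGAN layer is larger).
rng = np.random.default_rng(0)
A = rng.standard_normal((64, 16))
dirs, vals = sefa_directions(A, k=3)
```

Each returned direction n maximizes ‖A n‖ among unit vectors orthogonal to the earlier ones, which is why moving a latent code along n produces the largest change in the style parameters.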

Proposed Methods

Summary of the proposed framework. I′_style and M are the inputs of the SPARN decoder. In the decoder, each region normalization layer uses I′_style and M to modulate the layer activations.
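As a rough sketch of what a region normalization layer does, the NumPy code below normalizes each channel separately over the masked and unmasked pixels and then applies a spatially varying affine modulation. It assumes a binary mask where 1 marks the region to inpaint, and it takes the modulation maps `gamma` and `beta` as plain inputs; in the SPARN decoder they would be derived from I′_style and M, but how that is done is not described here, so `region_normalize` and `modulate` are hypothetical names and the exact layer may differ.

```python
import numpy as np


def region_normalize(x: np.ndarray, mask: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    """Normalize each channel separately inside and outside the masked region.

    x:    activations of shape (C, H, W)
    mask: binary mask of shape (H, W); 1 marks the region to inpaint (assumed)
    """
    out = np.zeros_like(x, dtype=np.float64)
    for region in (mask > 0.5, mask <= 0.5):
        if not region.any():
            continue  # skip an empty region to avoid statistics over zero pixels
        vals = x[:, region]  # (C, N): all pixels of this region, per channel
        mean = vals.mean(axis=1, keepdims=True)
        std = np.sqrt(vals.var(axis=1, keepdims=True) + eps)
        out[:, region] = (vals - mean) / std
    return out


def modulate(normalized: np.ndarray, gamma: np.ndarray, beta: np.ndarray) -> np.ndarray:
    """Hypothetical spatially varying affine modulation.

    gamma, beta: maps of shape (C, H, W); here they are supplied directly
    rather than predicted from the decoder's conditioning inputs.
    """
    return gamma * normalized + beta
```

Computing statistics per region keeps the features of the hole from being skewed by the mean and variance of the visible pixels, which is the motivation for region normalization over a single per-channel normalization.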

Qualitative Comparison

This figure compares the images generated by the proposed method with those generated by other methods. Our model outperforms all the compared methods in terms of image quality and plausibility.

Continuous Inpainting

Figure panels: Input, Ours1, Ours2, Ours3, Ours4

Illustration of continuous inpainting results obtained with SeFa by gradually adjusting delta. By simply altering this parameter, our model generates a series of smoothly varying inpainted images that follow semantic directions defined in the latent space of StyleGAN.
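The continuous results come from moving a latent code along a semantic direction by a step size delta. The sketch below shows only that latent arithmetic, w′ = w + δ·n, for a sequence of δ values; it assumes a W+ code of shape `(num_layers, 512)` and edits every layer, whereas an actual implementation may restrict the edit to selected layers. The function name `traverse` is hypothetical, and decoding each edited code into an image is outside the sketch.

```python
import numpy as np


def traverse(w: np.ndarray, direction: np.ndarray, deltas) -> np.ndarray:
    """Return the edited latent codes w + delta * n for each delta.

    w:         latent code, e.g. shape (num_layers, 512) for a W+ code
    direction: semantic direction of shape (512,), e.g. one found with SeFa
    deltas:    iterable of step sizes; small increments give smooth transitions
    """
    n = direction / np.linalg.norm(direction)     # use a unit-length direction
    return np.stack([w + d * n for d in deltas])  # n broadcasts over all layers


# Usage: four evenly spaced steps along one direction.
w = np.zeros((18, 512))
n = np.zeros(512)
n[0] = 2.0
deltas = np.linspace(-3.0, 3.0, 4)
codes = traverse(w, n, deltas)
```

Normalizing the direction first makes δ directly comparable across directions, so the same range of step sizes produces edits of similar magnitude in the latent space.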