Your Super Resolution Model is not Enough for Tackling Real-World Scenarios

International Conference on Computer Vision Workshop (ICCVW), 2025

Dongsik Yoon, Jongeun Kim

HDC LABS

Abstract

Despite remarkable progress in Single Image Super-Resolution (SISR), traditional models often struggle to generalize across varying scale factors, limiting their real-world applicability.

To address this, we propose a plug-in Scale-Aware Attention Module (SAAM) designed to retrofit modern fixed-scale SR models with the ability to perform arbitrary-scale SR. SAAM employs lightweight, scale-adaptive feature extraction and upsampling. It incorporates the Simple parameter-free Attention Module (SimAM) for efficient guidance and a gradient variance loss to sharpen image details. Our method integrates seamlessly into multiple state-of-the-art SR backbones (e.g., SCNet, HiT-SR, OverNet), delivering competitive or superior performance across a wide range of integer and non-integer scale factors.

Extensive experiments on benchmark datasets demonstrate that our approach enables robust multi-scale upscaling with minimal computational overhead, offering a practical solution for real-world scenarios.

Proposed Methods

Comparison between the existing feature adaptation block and the proposed SAAM block.
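
To make the parameter-free attention idea concrete, the sketch below applies a SimAM-style gate to a single 2D feature channel in plain Python. It follows the commonly published SimAM formulation (per-channel mean and variance, an inverse-energy term, and a sigmoid gate). The function name `simam`, the list-of-rows input format, and the default `lam` value are assumptions made for illustration; how SAAM actually wires SimAM into its scale-adaptive layers is not specified on this page.

```python
import math

def simam(channel, lam=1e-4):
    """SimAM-style gating for one 2D feature channel (list of rows).

    Illustrative sketch only: `lam` is the regularization constant from
    the SimAM formulation, and the channel must hold at least 2 values.
    """
    h, w = len(channel), len(channel[0])
    n = h * w - 1  # number of "other" activations in the channel
    mean = sum(sum(row) for row in channel) / (h * w)
    # Squared deviation of every activation from the channel mean.
    dev = [[(v - mean) ** 2 for v in row] for row in channel]
    var = sum(sum(row) for row in dev) / n
    out = []
    for row, drow in zip(channel, dev):
        # Inverse energy: larger for activations that stand out from the mean.
        inv_energy = [d / (4.0 * (var + lam)) + 0.5 for d in drow]
        # Sigmoid gate applied element-wise; no learnable parameters involved.
        out.append([v / (1.0 + math.exp(-e)) for v, e in zip(row, inv_energy)])
    return out

if __name__ == "__main__":
    feature = [[1.0, 1.0, 1.0],
               [1.0, 9.0, 1.0],
               [1.0, 1.0, 1.0]]
    for row in simam(feature):
        print([round(v, 3) for v in row])
```

In this toy input the center activation deviates most from the channel mean, so it receives a larger gate than its neighbors. Because the gate is computed from channel statistics alone, it adds no trainable weights, which is consistent with the lightweight design goal stated in the abstract.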

Quantitative Comparison

As the table shows, each baseline model is trained on data matching a single output scale, so most cannot run inference at unseen scales. Our unified model, which adds only a lightweight plug-in, delivers comparable or superior results across many scales.

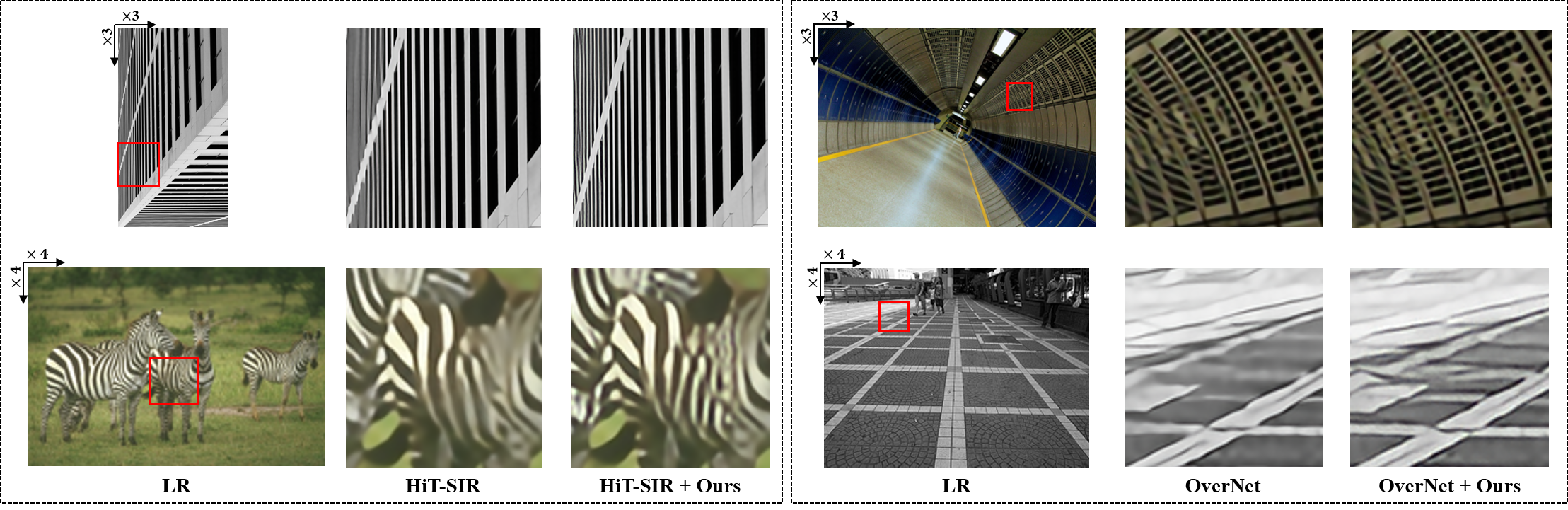

Qualitative Comparison

Qualitative comparison on BSD100 and Urban100, obtained by integrating our method into the baseline model.